Recreating Complex Journalistic Photos as 3D Scenes Using AI

SPRING QUARTER 2025

4/30/2025

When a major disaster happens—like a hurricane or earthquake—photos are often the first way we understand what occurred. These images capture pain, loss, and damage, but they are still flat. They show only one angle and one moment in time.

Using artificial intelligence and 3D reconstruction, we can now turn those photos into detailed virtual environments. This method rebuilds the original scene from details visible in the photo, such as the layout of buildings, broken structures, fallen trees, and debris on the ground.

By analyzing the image carefully and recreating it using 3D tools and AI, we can produce a virtual space that closely matches the real-world scene. This makes the news more immersive and helps people better understand the size and seriousness of the event. It also opens new possibilities for journalism, education, and even future crisis planning.

In short, this project aims to turn real journalistic photos into fully 3D environments using AI. Instead of presenting only flat images, we rebuild the scene so that viewers can explore and interact with it. The process includes analyzing the original photo, regenerating missing parts with AI, creating 3D models and textures, and adding extra visual details such as debris and vehicles.

Real Photo

Step 1: Analyzing Real Journalistic Photos

To recreate a scene, we must first study the original news photo carefully. We focus on three main areas:

Environment

We examine the natural surroundings of the image:

Types of trees

Soil color

Terrain level (flat, rough, damaged)

Building Style

We identify the architectural style in the photo:

Shape of buildings

Type of construction (modern, traditional, damaged)

Details like doors, windows, and roofs

Auxiliary Details

We collect small but important objects in the scene:

Wires, poles, signs, rubble, etc.

Elements that help complete the photo's visual story
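The three areas above can be captured as a simple checklist before any modeling starts. The sketch below is only an illustration: the `SceneAnalysis` class, its field names, and the sample values are hypothetical and not part of the project's actual tooling.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SceneAnalysis:
    """Hypothetical record of what was observed in one news photo."""

    # Environment
    tree_types: List[str] = field(default_factory=list)
    soil_color: str = ""
    terrain: str = ""  # e.g. "flat", "rough", "damaged"

    # Building style
    building_shapes: List[str] = field(default_factory=list)
    construction: str = ""  # e.g. "modern", "traditional", "damaged"
    building_details: List[str] = field(default_factory=list)

    # Auxiliary details
    auxiliary_objects: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Return the names of fields that have not been filled in yet."""
        return [name for name, value in vars(self).items() if not value]


# Example values are invented for illustration only.
analysis = SceneAnalysis(
    tree_types=["palm"],
    soil_color="red-brown",
    terrain="damaged",
)
print(analysis.missing_fields())
```

Listing the empty fields makes it easy to see which parts of the photo still need a closer look before moving on to the next step.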

AI Generated Image

Step 2: Regenerating AI-Based 2D Images

After analysis, we use AI tools to fix and extend the original images. This includes:

Cleaning damaged or unclear parts

Creating new angles or perspectives using AI

Using generative models to imagine the full scene

This helps us prepare better input for the 3D modeling phase.
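AI cleanup tools usually need to be told which pixels to regenerate and which to keep. As a minimal sketch, assuming the damaged or unclear regions have been marked by hand as rectangles, the code below builds such a mask. The function names and the box coordinates are hypothetical, and the step that actually regenerates the pixels is left out because it depends on the tool used.

```python
def build_inpaint_mask(width, height, boxes):
    """Return a height x width grid: 1 = pixel to regenerate, 0 = keep.

    Each box is (left, top, right, bottom) in pixels, with right and
    bottom exclusive.
    """
    mask = [[0] * width for _ in range(height)]
    for left, top, right, bottom in boxes:
        # Clamp each box to the image bounds so stray coordinates are ignored.
        for y in range(max(0, top), min(height, bottom)):
            for x in range(max(0, left), min(width, right)):
                mask[y][x] = 1
    return mask


def masked_fraction(mask):
    """Share of the image that will be regenerated (0.0 to 1.0)."""
    total = sum(len(row) for row in mask)
    return sum(sum(row) for row in mask) / total


# Tiny 4 x 3 example with one marked region.
mask = build_inpaint_mask(4, 3, [(1, 0, 3, 2)])
for row in mask:
    print(row)
print(masked_fraction(mask))
```

Tracking the masked fraction is one simple way to notice when too much of a journalistic photo is being replaced by generated content.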

Step 3: Creating 3D Objects and Geometry

In this stage, we build 3D models based on the processed images.

Generating 3D Objects

Using depth estimation and AI-based modeling:

We recreate buildings, streets, rubble, and other major shapes

Tools like Blender, Meshroom, or AI plugins help automate this
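To illustrate how depth estimation leads to geometry, here is a minimal sketch that turns a depth map into 3D points using the standard pinhole camera model. It assumes the depth values have already been produced by some depth estimation model, and that the camera parameters (`fx`, `fy`, `cx`, `cy`) are rough guesses, since a news photo rarely comes with calibration data. The function name and the sample numbers are hypothetical.

```python
def backproject_depth(depth, fx, fy, cx, cy):
    """Convert a depth map into a list of (x, y, z) points.

    depth  : 2D list of distances along the camera axis (0 = no data)
    fx, fy : assumed focal lengths in pixels
    cx, cy : assumed principal point in pixels
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # skip pixels without a valid depth value
            # Pinhole model: pixel offset from the center, scaled by depth.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A 2 x 2 toy depth map; real maps have one value per image pixel.
depth = [
    [2.0, 0.0],
    [2.0, 4.0],
]
for point in backproject_depth(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5):
    print(point)
```

The resulting point cloud is only a starting shape. Turning it into clean buildings and streets still requires meshing and manual cleanup in a 3D tool.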

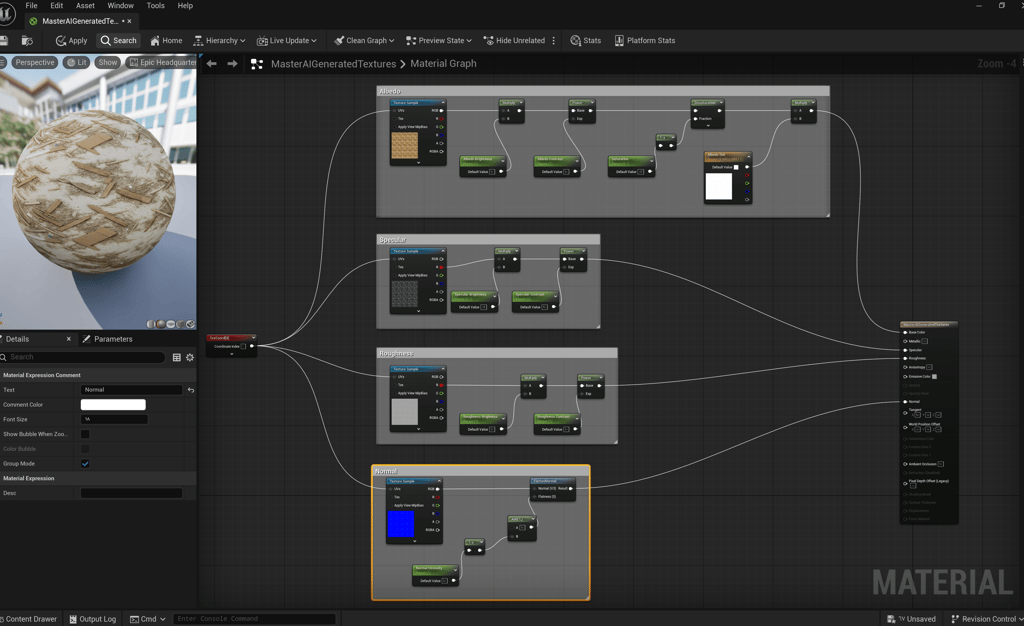

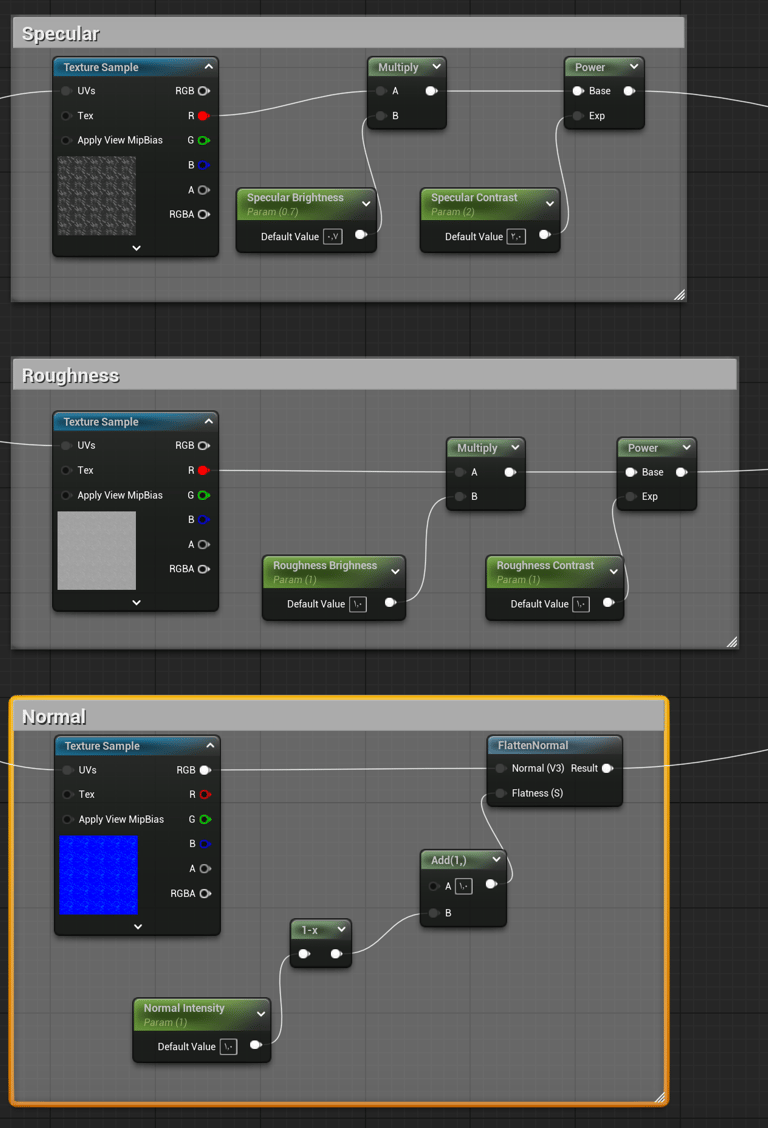

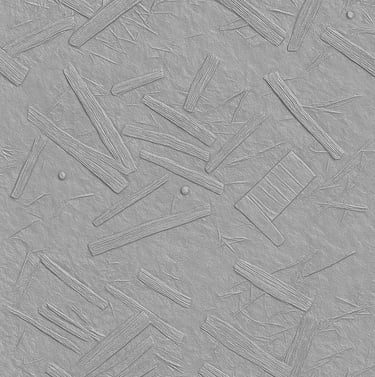

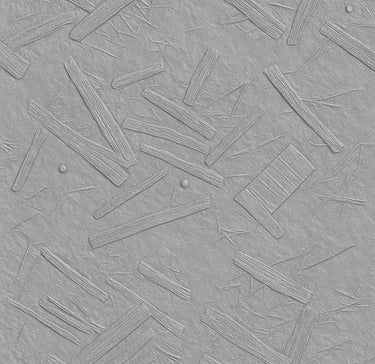

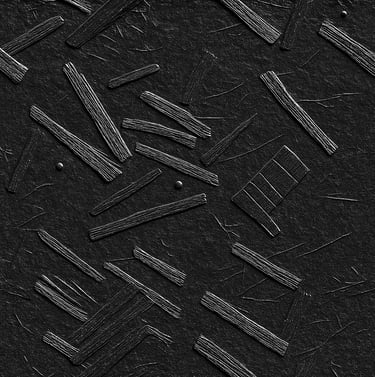

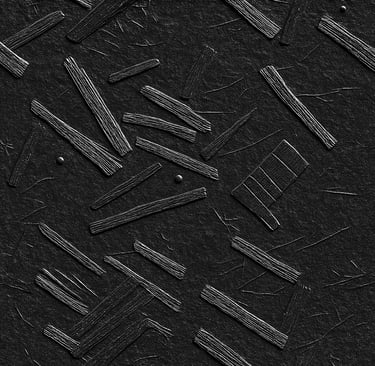

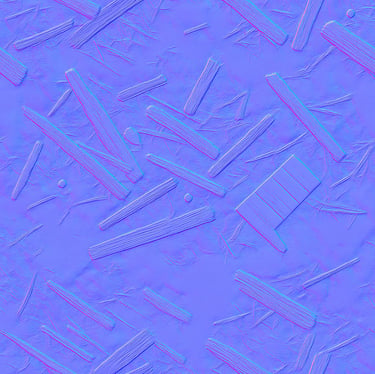

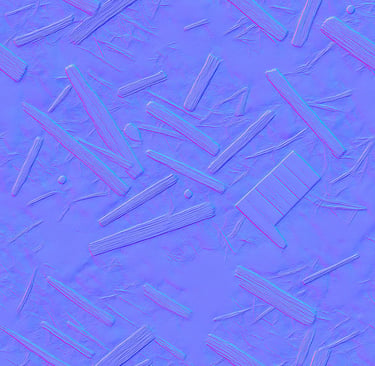

Step 4: Generating Materials and Textures

Textures make the scene look realistic. We use AI to generate:

Base color maps

Roughness and normal maps (to control how surfaces reflect light and to add fine surface relief)

Materials that match the environment (e.g., concrete, wood, soil)
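To show what a normal map encodes, the sketch below derives one from a grayscale height map using finite differences. This is a common general technique, not necessarily what the AI texture tools do internally. The function names are hypothetical, and the sign of the green channel is an assumption, since it differs between tools.

```python
import math


def height_to_normals(height, strength=1.0):
    """Compute a unit surface normal for every pixel of a height map."""
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Differences between neighbors, clamped at the image border.
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength
            # The normal tilts away from the direction in which height rises.
            length = math.sqrt(dx * dx + dy * dy + 1.0)
            row.append((-dx / length, -dy / length, 1.0 / length))
        normals.append(row)
    return normals


def encode_rgb(normal):
    """Map each component from [-1, 1] to a 0-255 color value."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)


flat = [[0.5, 0.5], [0.5, 0.5]]
print(encode_rgb(height_to_normals(flat)[0][0]))  # → (128, 128, 255)
```

A perfectly flat surface encodes to the light blue color that dominates most normal maps. Cracks and bumps in concrete or soil show up as shifts away from that color.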

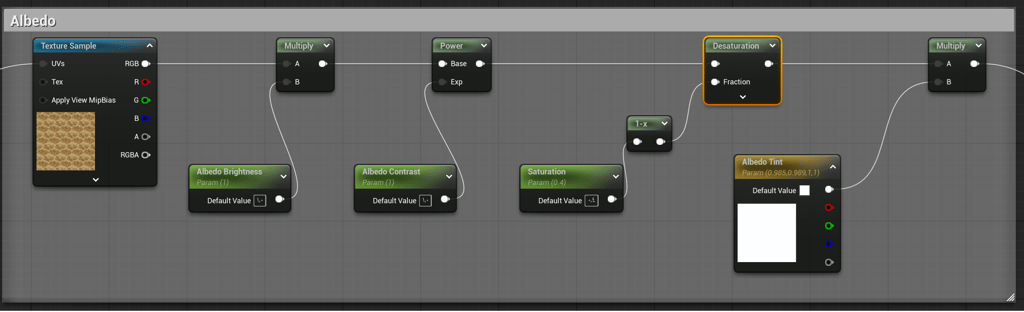

Material Node Details

Step 5: Importing Extra Details

To complete the scene, we add small but meaningful 3D assets.

3D Scans

We import photogrammetry assets or scanned debris to match real objects in the photo.

Matching Trees

We add tree models that are similar in shape and type to the ones shown in the original image.

Cars and Urban Objects

We place cars, traffic signs, paper trash, and other small elements to make the scene feel real and complete.
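Placing many small objects by hand is slow, so placement can be partly automated. The sketch below scatters assets over a rectangular ground area while keeping a minimum distance between them. It is an assumption about how this could be done, not the project's actual workflow. The function name, asset names, and distances are hypothetical, and in practice the positions would be adjusted by hand to match the original photo.

```python
import math
import random


def scatter_assets(names, area, min_distance, seed=0, max_tries=1000):
    """Pick a position and rotation for each asset.

    names        : asset names to place, one placement per name
    area         : (width, depth) of the ground rectangle in scene units
    min_distance : minimum allowed spacing between two assets
    Returns a list of (name, x, y, rotation_degrees) tuples. An asset is
    skipped if no free spot is found within max_tries attempts.
    """
    rng = random.Random(seed)  # fixed seed keeps the layout reproducible
    width, depth = area
    placed = []
    for name in names:
        for _attempt in range(max_tries):
            x = rng.uniform(0, width)
            y = rng.uniform(0, depth)
            far_enough = all(
                math.hypot(x - other_x, y - other_y) >= min_distance
                for _name, other_x, other_y, _rot in placed
            )
            if far_enough:
                placed.append((name, x, y, rng.uniform(0, 360)))
                break
    return placed


layout = scatter_assets(
    ["car", "traffic_sign", "tree", "debris"],
    area=(20.0, 20.0),
    min_distance=2.0,
    seed=1,
)
for name, x, y, rotation in layout:
    print(f"{name}: x={x:.1f}, y={y:.1f}, rotation={rotation:.0f}")
```

Using a fixed seed means the same layout can be regenerated later, which helps when the scene needs to be reviewed against the source photo.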