Testing AI Motion Capture: Variables, Methods & Expected Outcomes (Part 2)

Methodological Framework

This second part of the research on AI-powered motion capture systems describes the testing framework used for the evaluation. The methodology varies a defined set of inputs in a controlled way and measures their effect on capture performance.

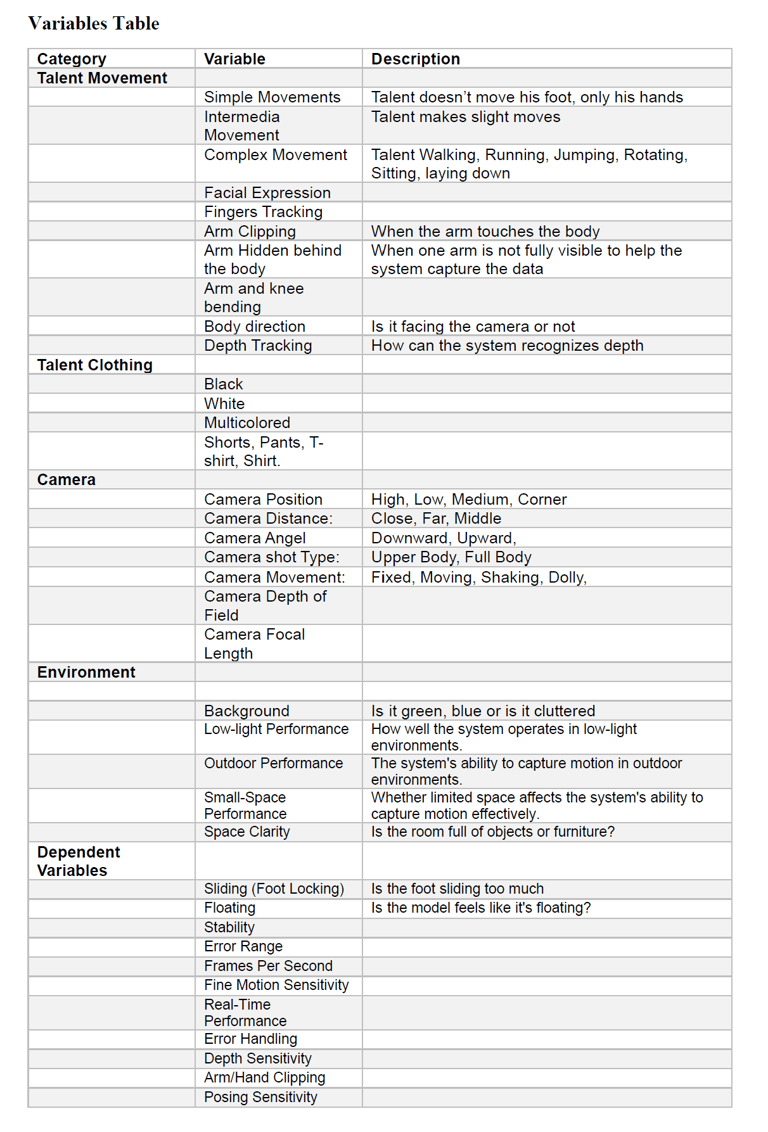

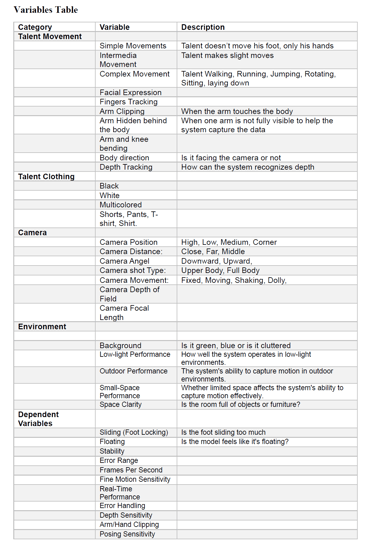

Variable Classification

The research design separates independent variables (controlled inputs) from dependent variables (measured outputs) so that each measured effect can be traced back to a specific change in the test conditions.

Independent Variables

1. Talent Movement Variables

Simple Movement Patterns: Isolated hand gestures with stationary lower body positioning

Intermediate Movement Complexity: Moderate body movements with limited spatial displacement

Complex Movement Sequences: Walking, running, jumping, rotational movements

Specialized Tracking Elements: Facial expression capture, finger movement precision

Occlusion Scenarios: Arm-body intersection, partial limb visibility, varied body orientations

2. Talent Clothing Variables

Chromatic Variables: Black, white, and multi-colored apparel

Garment Configuration: Various clothing styles including shorts, pants, t-shirts, and formal shirts

3. Camera Configuration Variables

Positional Placement: High, low, medium, and corner mounting positions

Distance Metrics: Close, intermediate, and distant camera positioning

Angular Orientation: Downward, upward, and neutral angles

Framing Parameters: Upper body isolation versus full-body capture

Stability Factors: Static, moving, and deliberately unstable camera conditions

4. Environmental Variables

Background Composition: Chroma key surfaces versus visually complex environments

Illumination Conditions: Optimal, moderate, and suboptimal lighting

Spatial Context: Indoor versus outdoor environmental settings

Volumetric Constraints: Spacious versus restricted capture areas
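The independent variables above can be written down as a factor table, which makes the size of the test matrix explicit. The following is a minimal sketch, not the study's actual tooling: the dictionary keys, the shortened level labels, and the helper names `iter_conditions` and `condition_count` are all assumptions made for illustration. Garment configuration is left out because tops and bottoms combine rather than forming mutually exclusive levels.

```python
from itertools import product

# Levels taken from the lists above; keys and labels are illustrative shorthand.
FACTORS = {
    "movement": ["simple", "intermediate", "complex", "specialized", "occlusion"],
    "clothing_color": ["black", "white", "multi-colored"],
    "camera_position": ["high", "low", "medium", "corner"],
    "camera_distance": ["close", "intermediate", "distant"],
    "camera_angle": ["downward", "upward", "neutral"],
    "framing": ["upper body", "full body"],
    "stability": ["static", "moving", "unstable"],
    "background": ["chroma key", "complex"],
    "lighting": ["optimal", "moderate", "suboptimal"],
    "setting": ["indoor", "outdoor"],
    "capture_area": ["spacious", "restricted"],
}


def iter_conditions(factors):
    """Yield one dict per test condition (full crossing of all factor levels)."""
    names = list(factors)
    for levels in product(*factors.values()):
        yield dict(zip(names, levels))


def condition_count(factors):
    """Number of conditions in the full crossing, without enumerating them."""
    count = 1
    for levels in factors.values():
        count *= len(levels)
    return count
```

With the levels as listed, `condition_count(FACTORS)` comes to 77,760, so a full crossing is large. Iterating lazily with `iter_conditions` avoids holding the whole matrix in memory, and the same table can be filtered down if only a subset of conditions is captured.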

Dependent Variables

The research measures multiple performance indicators including:

Movement Fidelity Metrics: Foot sliding/locking phenomena, gravitational adherence, stability measurements

Technical Performance Parameters: Error range quantification, frame rate consistency

Detail Resolution Capability: Fine motion detection thresholds, spatial depth perception

Operational Functionality: Real-time processing performance, error management protocols

Technical Limitations: Limb intersection handling, pose recognition sensitivity
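Two of these indicators, foot sliding and frame rate consistency, lend themselves to simple numeric definitions. The sketch below shows one possible way to compute them and is not necessarily the definition used in this research. It assumes a y-up coordinate system, positions in metres, timestamps in seconds, and a hypothetical `contact_height` threshold for deciding when a foot is on the ground.

```python
import math
from statistics import mean, pstdev


def foot_sliding_distance(foot_positions, contact_height=0.02):
    """Sum the horizontal distance a foot travels while it stays on the ground.

    foot_positions: list of (x, y, z) tuples, one per frame, with y pointing up.
    contact_height: assumed threshold below which the foot counts as planted.
    """
    total = 0.0
    for (x0, y0, z0), (x1, y1, z1) in zip(foot_positions, foot_positions[1:]):
        # Only count movement between two consecutive frames that are both in contact.
        if y0 <= contact_height and y1 <= contact_height:
            total += math.hypot(x1 - x0, z1 - z0)
    return total


def frame_interval_stats(timestamps):
    """Return mean frame rate and the spread of frame intervals (needs >= 2 frames)."""
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    return {
        "mean_fps": 1.0 / mean(intervals),
        "interval_jitter": pstdev(intervals),  # 0.0 means perfectly even frames
    }
```

A planted foot that does not slide yields a distance of zero, so larger values indicate more sliding. The jitter value complements the mean frame rate, since a system can hit its target rate on average while still delivering frames unevenly.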

Methodological Procedure

The research implementation follows a four-phase protocol:

1. Environmental Configuration: Standardized setup of AI motion capture systems in controlled environments

2. Systematic Testing: Data acquisition across all combinations of the independent variables

3. Quantitative Assessment: Methodical measurement of dependent variables

4. Statistical Analysis: Application of analytical tools to identify statistical significance and correlation patterns
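For the correlation part of the final phase, one common starting point is the Pearson coefficient between a numeric input (for example, camera distance) and a measured output (for example, positional error). The function below is a minimal, dependency-free sketch under that assumption. The source does not name the analytical tools actually used, and the sketch does not cover significance testing.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # A constant input or output has no defined correlation.
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)
```

Values near +1 or -1 indicate a strong linear relationship and values near 0 indicate none. Categorical inputs such as clothing color or background type do not fit this measure and would need a group comparison instead.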

Anticipated Research Outcomes

This investigation is expected to yield:

Comprehensive technical profiles of evaluated AI motion capture systems

Identification of performance differentials across varied application scenarios

Quantification of how much the AI components contribute to motion capture quality

Determination of critical variables affecting performance optimization

Research Implications

The findings are intended to inform technical implementation in animation, interactive media development, virtual reality applications, and biomechanical analysis. They are expected to support:

Evidence-based system selection aligned with specific technical requirements

Environmental and configurational optimization

Enhanced understanding of technological constraints

Informed capital investment in motion capture infrastructure